Besides chat models, the OpenAI API offers a number of other useful tools, including Moderation.

Here is what I know.

Is this API free? Yes.

That's right: Moderation is free to use.

Purpose of Moderation

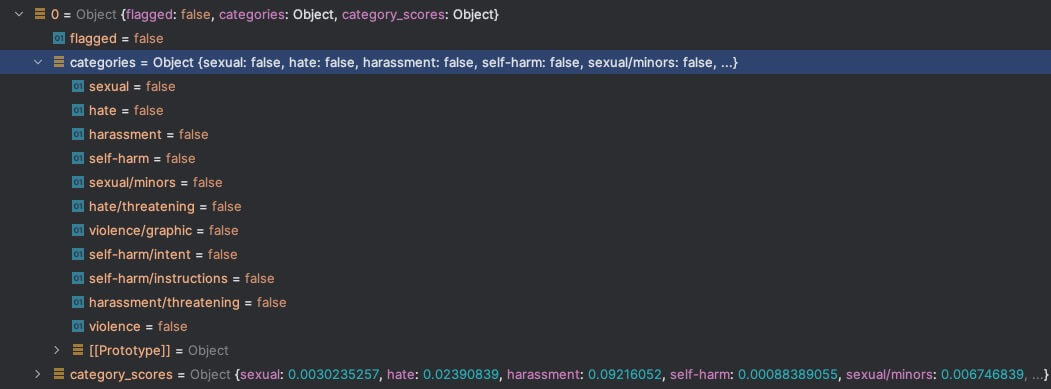

Given a piece of input content, Moderation scores it against a set of predefined categories and flags whether the content falls into each of them.
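To make this concrete, here is a minimal sketch of a moderation request built with only the Python standard library. It assumes the REST endpoint https://api.openai.com/v1/moderations, a JSON body with an input field, and bearer-token authentication; the helper name build_moderation_request and the placeholder key are illustrative. The sketch only builds the request, so it runs offline; actually sending it needs network access and a real API key.

```python
import json
import urllib.request

# Assumed REST endpoint for the Moderation API.
MODERATION_URL = "https://api.openai.com/v1/moderations"


def build_moderation_request(text: str, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a POST request asking Moderation to score `text`."""
    body = json.dumps({"input": text}).encode("utf-8")
    return urllib.request.Request(
        MODERATION_URL,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )


# "sk-..." is a placeholder, not a real key.
req = build_moderation_request("Who are you?", api_key="sk-...")

# Sending it (requires network access and a valid key):
# with urllib.request.urlopen(req) as resp:
#     result = json.load(resp)
#     print(result["results"][0]["flagged"])
```

Each element of the response's results list carries a flagged boolean, a categories map of per-category booleans, and a category_scores map of per-category floats.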

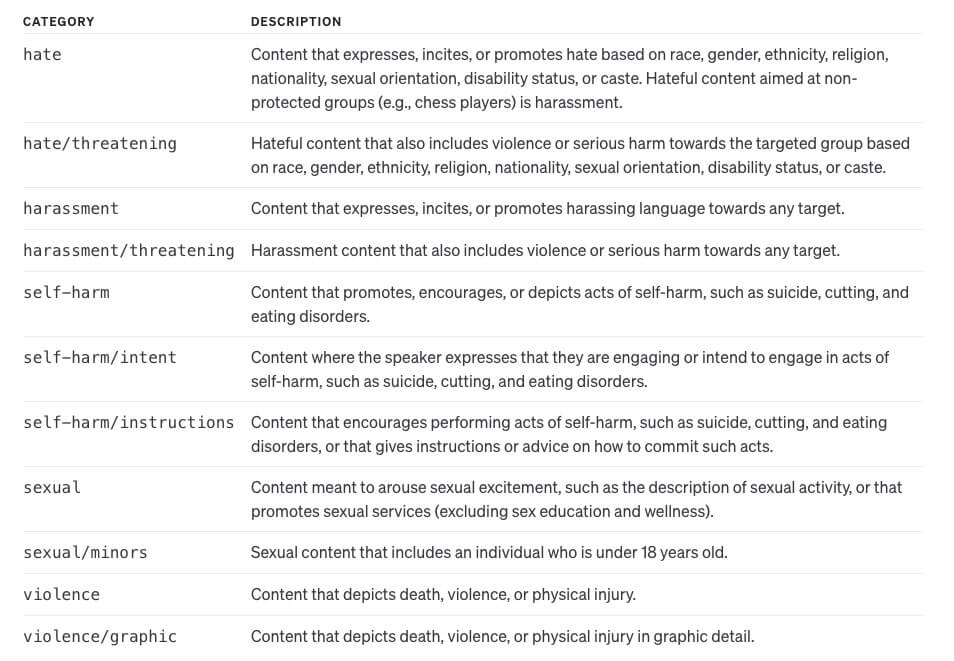

OpenAI supports the following categories:

Examples

Who are you?

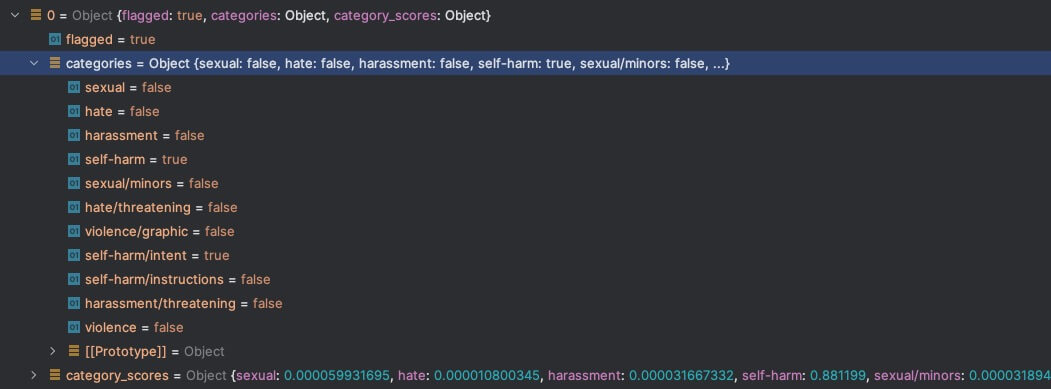

How to suicide?

As you can see, the flagged field correctly marks the second input as violating the moderation policy, and its self-harm/intent category is true.
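Reading which categories fired can be sketched as below. The response is a trimmed, hypothetical example: the field names follow the response shape described above, but the values are made up for illustration and are not real API output. The helper name flagged_categories is also illustrative.

```python
from typing import Any


def flagged_categories(response: dict[str, Any]) -> list[str]:
    """Return, sorted, the names of categories marked true in the first result."""
    result = response["results"][0]
    return sorted(name for name, hit in result["categories"].items() if hit)


# Hypothetical, trimmed response; the boolean values are invented for illustration.
sample_response = {
    "results": [
        {
            "flagged": True,
            "categories": {
                "self-harm": True,
                "self-harm/intent": True,
                "violence": False,
            },
        }
    ]
}

print(flagged_categories(sample_response))  # ['self-harm', 'self-harm/intent']
```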

About Category Scores

Sometimes we want finer control over how strictly content is moderated, rather than relying only on the true/false value of each category.

In that case, we can use the category scores to define our own thresholds, although the categories themselves are still limited to those provided by OpenAI.
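A custom standard built on the scores might look like the following sketch. The threshold values, the default of 0.5, and the names CUSTOM_THRESHOLDS and violates_policy are all hypothetical choices for illustration, and the sample scores are invented rather than taken from a real response.

```python
from collections.abc import Mapping

# Hypothetical per-category thresholds; tune these for your own use case.
CUSTOM_THRESHOLDS: dict[str, float] = {
    "self-harm/intent": 0.2,  # stricter than the default
    "violence": 0.7,          # more lenient than the default
}
DEFAULT_THRESHOLD = 0.5       # used for any category not listed above


def violates_policy(
    category_scores: Mapping[str, float],
    thresholds: Mapping[str, float] = CUSTOM_THRESHOLDS,
    default: float = DEFAULT_THRESHOLD,
) -> list[str]:
    """Return, sorted, the categories whose score meets or exceeds our own threshold."""
    return sorted(
        name
        for name, score in category_scores.items()
        if score >= thresholds.get(name, default)
    )


# Made-up scores for illustration, not real API output.
scores = {"self-harm/intent": 0.35, "violence": 0.40, "hate": 0.01}
print(violates_policy(scores))  # ['self-harm/intent']
```

Keeping a per-category map with a fallback default lets you tighten only the categories you care most about while leaving the rest at a uniform bar.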

Limitations of Moderation

- Limited categories: only the predefined categories above are supported, so political content, for example, is not covered.
- Limited non-English support: in my testing, Chinese is supported, but not comprehensively.

Final Thoughts

For content safety in AI chat, there are currently a few approaches:

- System prompts/user prompts: prompts and conversation history can, to some extent, constrain the scope of the AI's responses.

- Content moderation: a model such as the Moderation API covered here can, to a certain extent, screen both user questions and AI answers.
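The two approaches can be combined. The sketch below screens the question, asks the model under a scope-limiting system prompt, then screens the answer. The system prompt, refusal text, and guarded_chat are hypothetical, and fake_is_flagged and fake_chat are offline stand-ins (a keyword check and an echo) for real calls to the Moderation endpoint and a chat model.

```python
from typing import Callable

# Hypothetical system prompt that narrows the assistant's scope.
SYSTEM_PROMPT = "You are a customer-support assistant. Only answer questions about our product."
REFUSAL = "Sorry, I can't help with that."


def guarded_chat(
    question: str,
    is_flagged: Callable[[str], bool],
    chat: Callable[[list[dict[str, str]]], str],
) -> str:
    """Moderate the question, ask the model, then moderate the answer."""
    if is_flagged(question):  # screen the user's input
        return REFUSAL
    messages = [
        {"role": "system", "content": SYSTEM_PROMPT},  # prompt-based scope control
        {"role": "user", "content": question},
    ]
    answer = chat(messages)
    if is_flagged(answer):  # screen the model's output as well
        return REFUSAL
    return answer


# Offline stand-ins; replace with real Moderation and chat-model calls.
def fake_is_flagged(text: str) -> bool:
    return "suicide" in text.lower()


def fake_chat(messages: list[dict[str, str]]) -> str:
    return f"Echo: {messages[-1]['content']}"


print(guarded_chat("Who are you?", fake_is_flagged, fake_chat))     # Echo: Who are you?
print(guarded_chat("How to suicide?", fake_is_flagged, fake_chat))  # Sorry, I can't help with that.
```

Passing the moderation check and the chat call in as functions keeps the guard logic independent of any particular client library.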